DGX racks integrate 600TB fast memory and NVMe storage per system

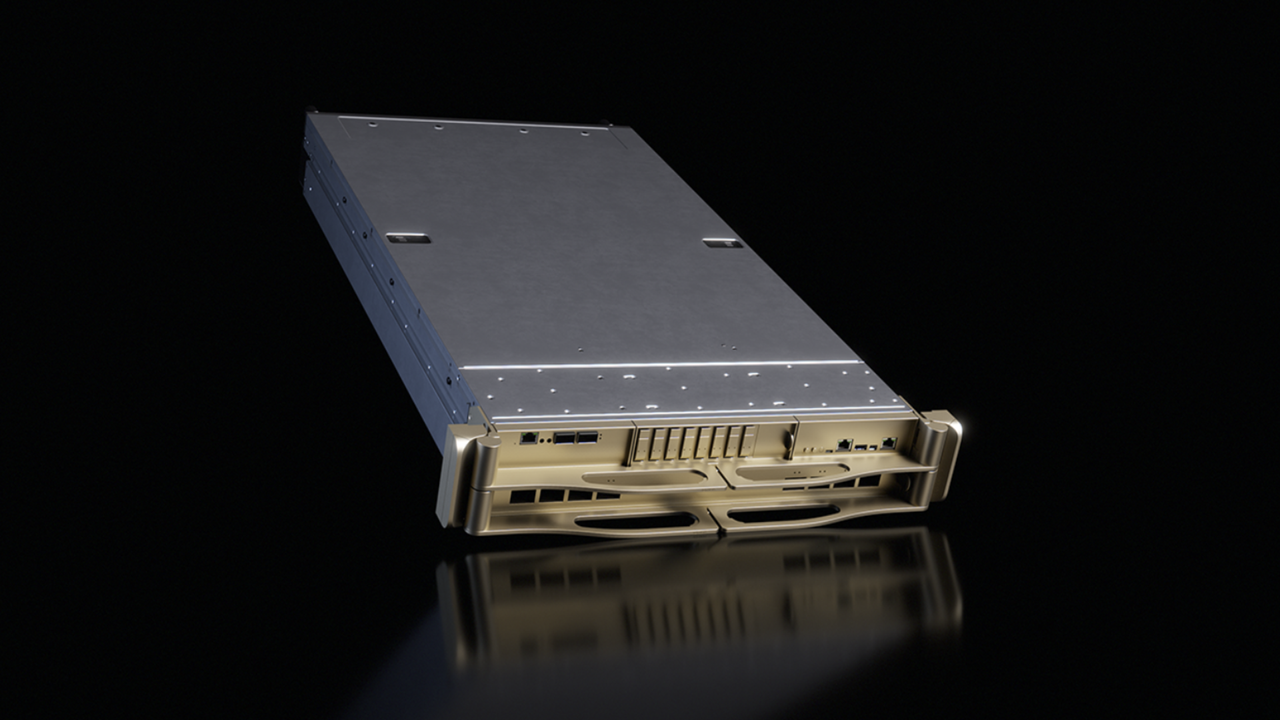

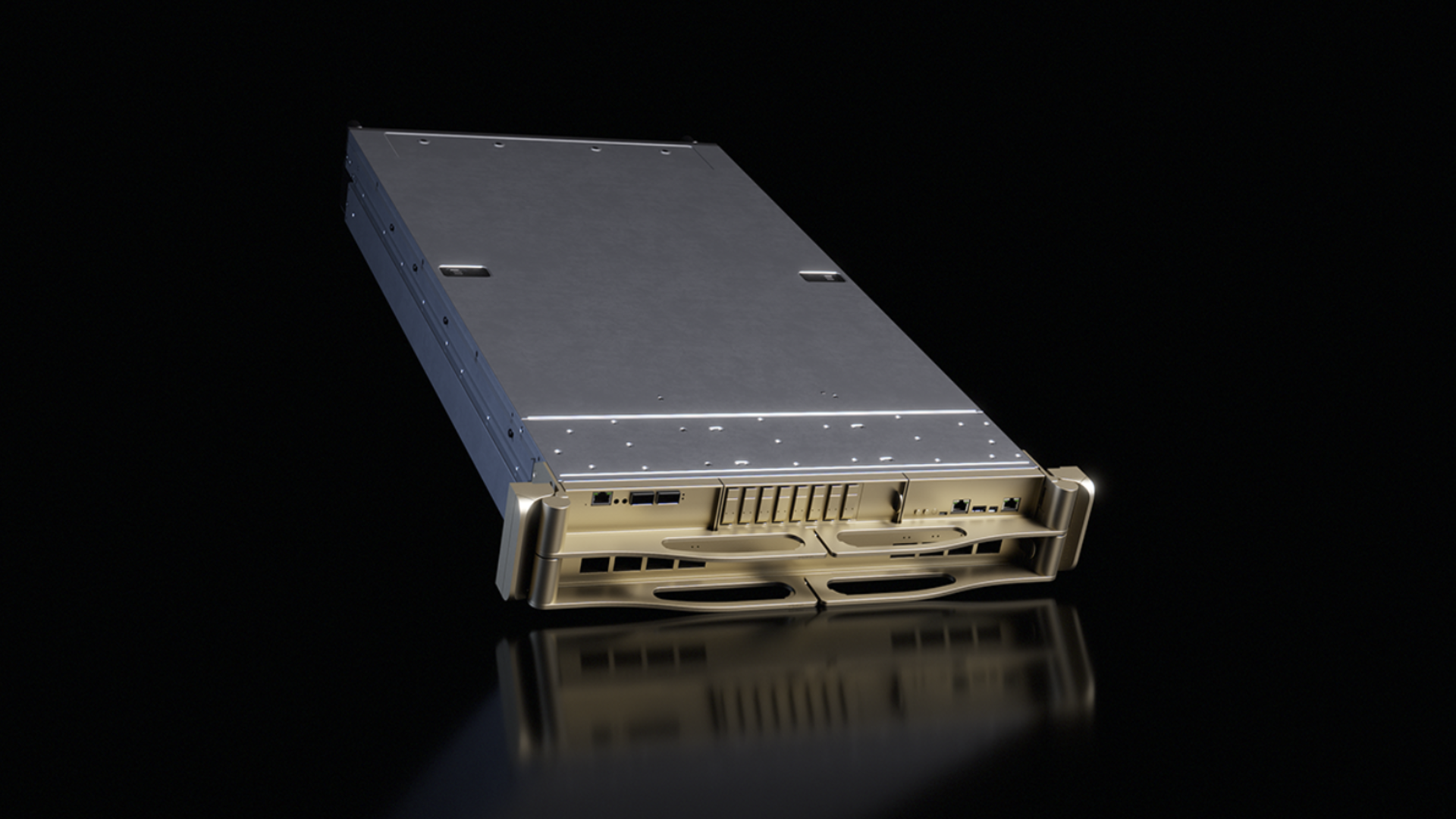

(Image credit: Nvidia)

- Nvidia Rubin DGX SuperPOD delivers 28.8 Exaflops with only 576 GPUs

- Each NVL72 system combines 36 Vera CPUs, 72 Rubin GPUs, and 18 DPUs

- Aggregate NVLink throughput reaches 260TB/s per DGX rack

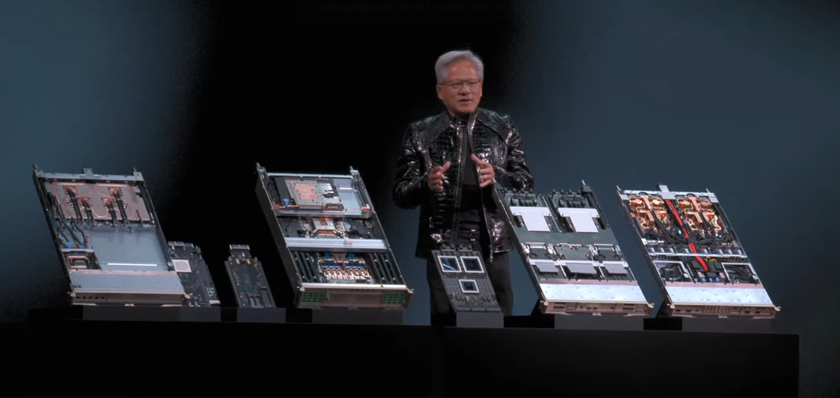

At CES 2026, Nvidia unveiled its next-generation DGX SuperPOD powered by the Rubin platform, a system designed to deliver extreme AI compute in dense, integrated racks.

According to the company, the SuperPOD integrates multiple Vera Rubin NVL72 or NVL8 systems into a single coherent AI engine, supporting large-scale workloads with minimal infrastructure complexity.

With liquid-cooled modules, high-speed interconnects, and unified memory, the system targets institutions seeking maximum AI throughput and reduced latency.

You may like-

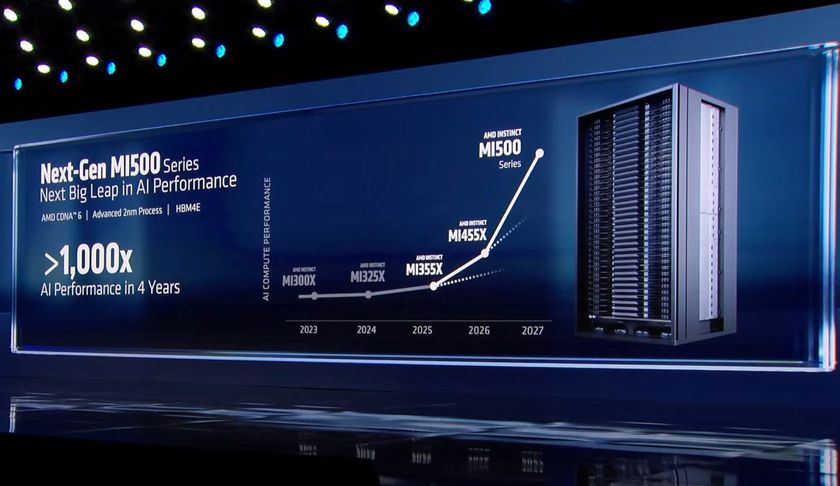

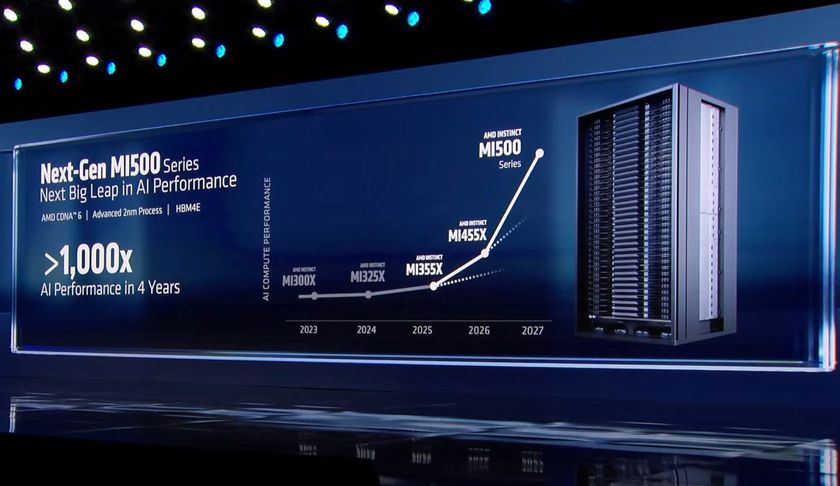

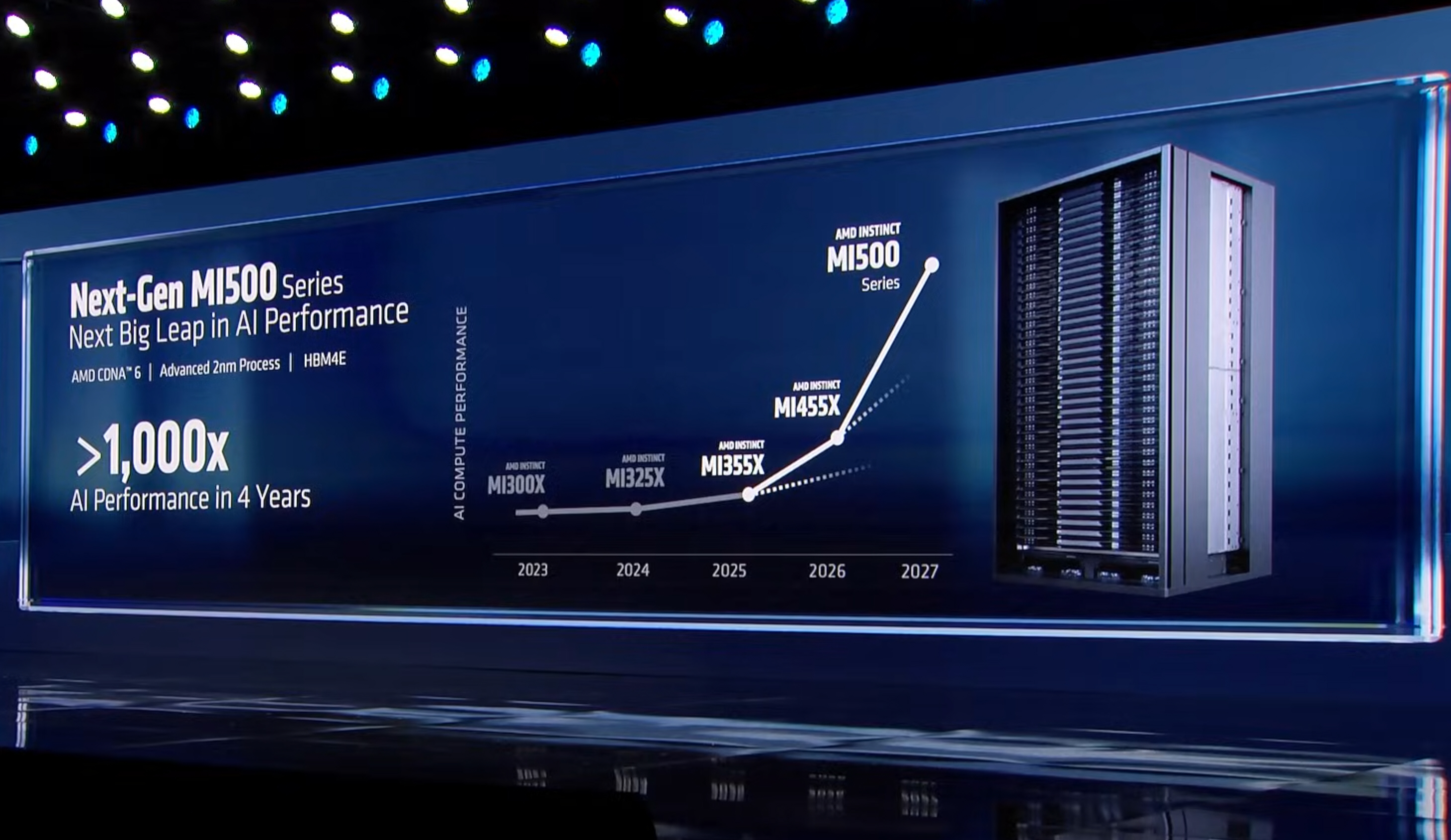

AMD says its Instinct MI500 AI Accelerator will come in 2027 — but is it too late with Nvidia set to introduce Vera-Rubin in 2026?

AMD says its Instinct MI500 AI Accelerator will come in 2027 — but is it too late with Nvidia set to introduce Vera-Rubin in 2026?

-

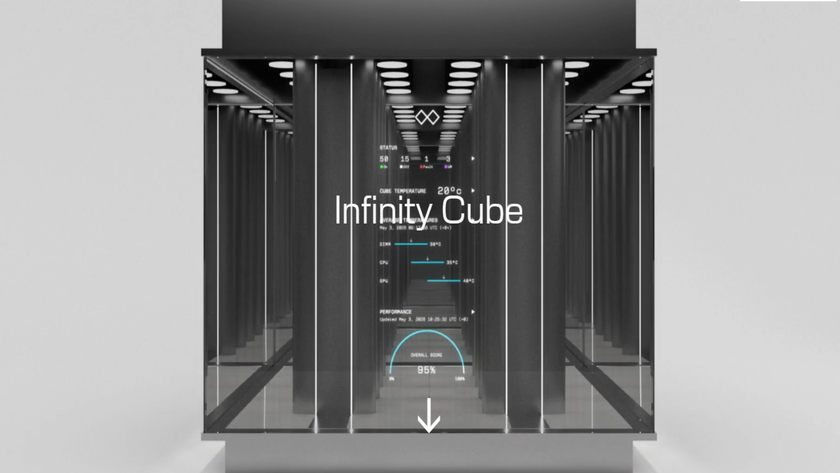

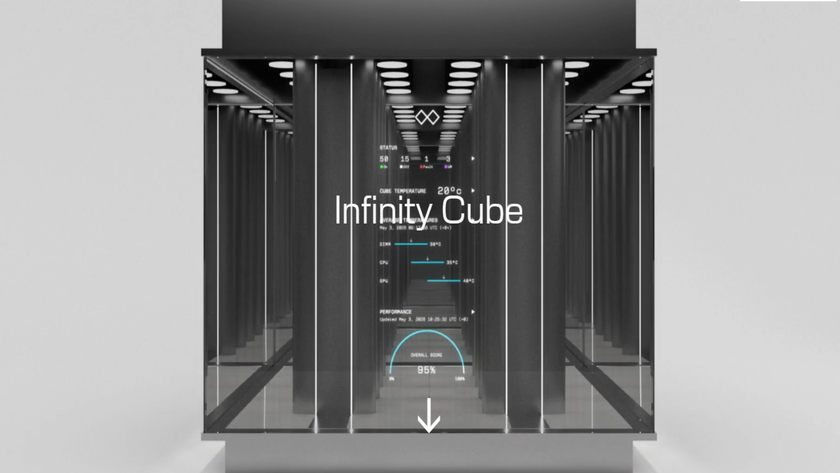

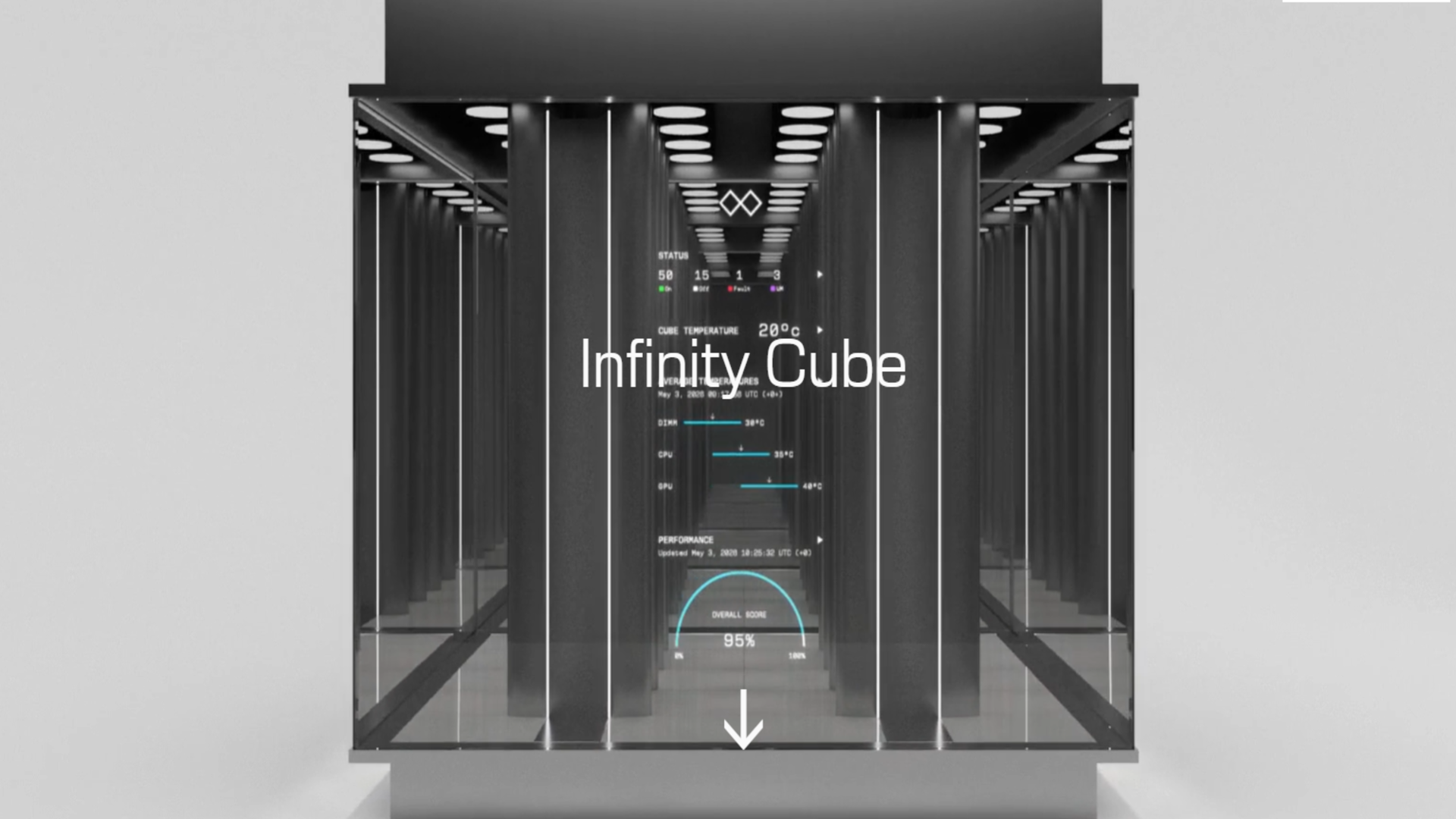

Nvidia partner wants to 'beautify' data centers with the Infinity Cube concept — plans to cram 86TB DDR5 and 224 B200 GPU in a liquid-cooled 14ft cube

Nvidia partner wants to 'beautify' data centers with the Infinity Cube concept — plans to cram 86TB DDR5 and 224 B200 GPU in a liquid-cooled 14ft cube

-

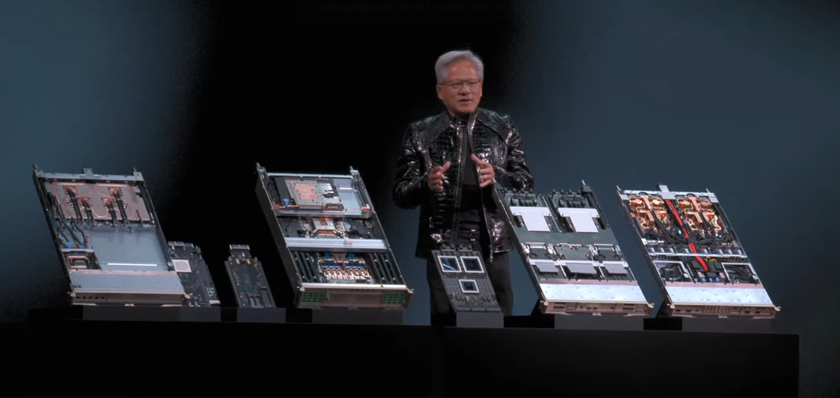

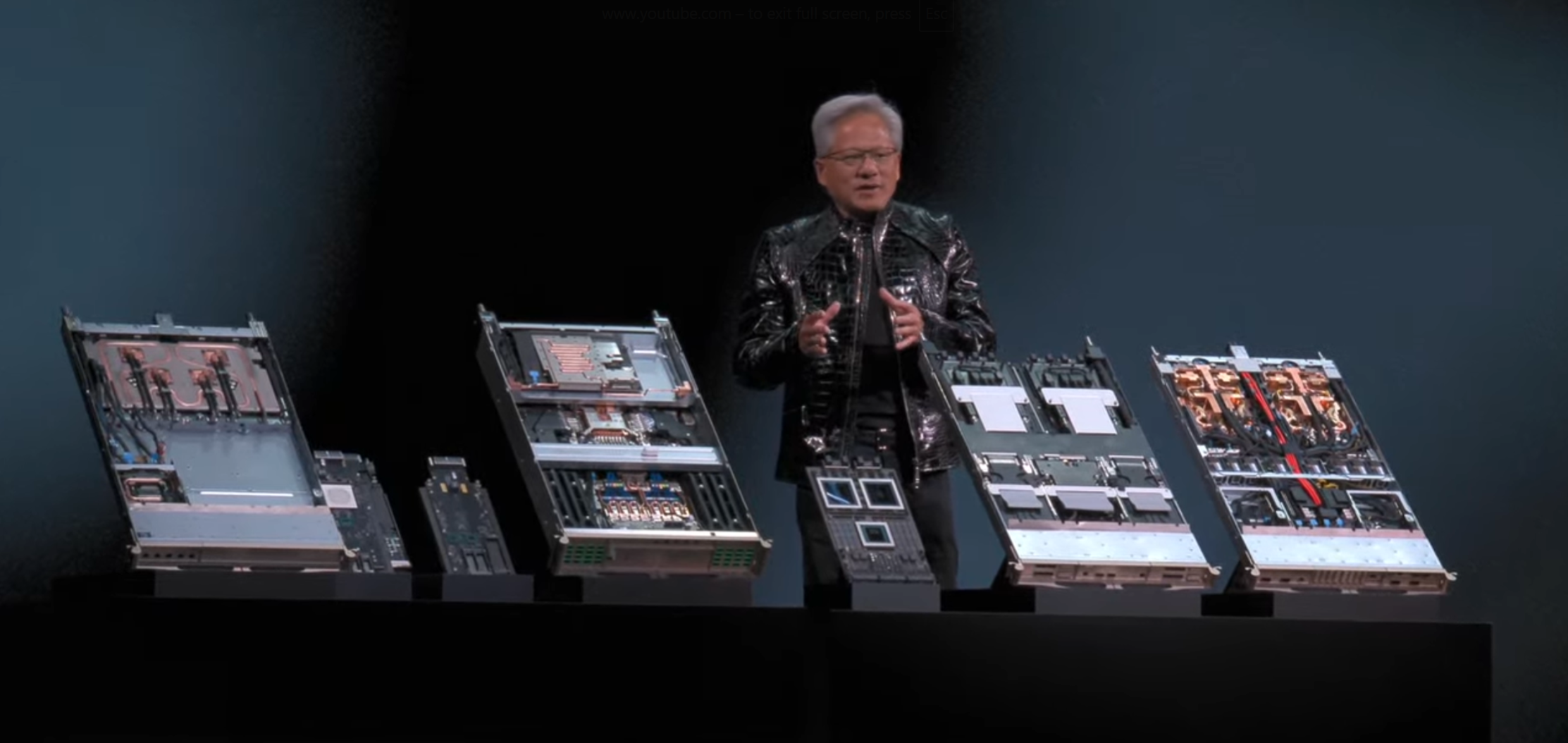

"The entire stack is being changed" - Nvidia CEO Jensen Huang looks ahead to the next generation of AI

"The entire stack is being changed" - Nvidia CEO Jensen Huang looks ahead to the next generation of AI

Rubin-based compute architecture

Each DGX Vera Rubin NVL72 system includes 36 Vera CPUs, 72 Rubin GPUs, and 18 BlueField-4 DPUs. The 50 petaflops of FP4 performance is a per-GPU figure: 576 GPUs at 50 petaflops each gives the 28.8 exaflops quoted for the full SuperPOD.

Aggregate NVLink throughput reaches 260TB/s per rack, allowing the full memory and compute space to operate as a single coherent AI engine.
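The rack-level figures above can be broken down per GPU with simple division. The sketch below uses only the numbers quoted in this article; the assumption that NVLink throughput is split evenly across all 72 GPUs is a simplification for illustration, not a statement from Nvidia, and the function and variable names are hypothetical.

```python
from fractions import Fraction

# Figures quoted in the article for one DGX Vera Rubin NVL72 system.
VERA_CPUS = 36
RUBIN_GPUS = 72
BLUEFIELD_DPUS = 18
NVLINK_AGGREGATE_TBPS = 260  # aggregate NVLink throughput per rack, in TB/s


def per_gpu_share(total: float, gpus: int = RUBIN_GPUS) -> float:
    """Divide a rack-level total evenly across the GPUs (an assumed even split)."""
    return total / gpus


# Component ratios inside a single NVL72 system.
gpus_per_cpu = Fraction(RUBIN_GPUS, VERA_CPUS)
gpus_per_dpu = Fraction(RUBIN_GPUS, BLUEFIELD_DPUS)

# Average NVLink throughput available to each GPU under the even-split assumption.
nvlink_per_gpu = per_gpu_share(NVLINK_AGGREGATE_TBPS)

print(f"GPUs per Vera CPU: {gpus_per_cpu}")
print(f"GPUs per BlueField DPU: {gpus_per_dpu}")
print(f"NVLink share per GPU: {nvlink_per_gpu:.2f} TB/s")
```

Under these assumptions, each Vera CPU is paired with two GPUs, each DPU serves four, and each GPU gets roughly 3.6TB/s of the rack's NVLink throughput.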

The Rubin GPU incorporates a third-generation Transformer Engine and hardware-accelerated compression, which Nvidia says lets inference and training workloads run efficiently at scale.

Connectivity is reinforced by Spectrum-6 Ethernet switches, Quantum-X800 InfiniBand, and ConnectX-9 SuperNICs, which support deterministic, high-speed AI data transfer.

Nvidia’s SuperPOD design emphasizes end-to-end networking performance, ensuring minimal congestion in large AI clusters.

Quantum-X800 InfiniBand delivers low latency and high throughput, while Spectrum-X Ethernet handles east-west AI traffic efficiently.

Each DGX rack incorporates 600TB of fast memory, NVMe storage, and integrated AI context memory to support both training and inference pipelines.

The Rubin platform also integrates advanced software orchestration through Nvidia Mission Control, streamlining cluster operations, automated recovery, and infrastructure management for large AI factories.

A DGX SuperPOD with 576 Rubin GPUs can achieve 28.8 Exaflops FP4, while individual NVL8 systems deliver 5.5x higher FP4 FLOPS than previous Blackwell architectures.
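As a quick sanity check on the headline figure, the SuperPOD total can be divided back down to the system and GPU level. This is a minimal sketch using only the numbers in this article (28.8 exaflops, 576 GPUs, 72 GPUs per NVL72 system); the function and variable names are hypothetical, and the per-GPU result is an implied average rather than a measured value.

```python
# Figures quoted in the article.
SUPERPOD_FP4_EXAFLOPS = 28.8
SUPERPOD_GPUS = 576
GPUS_PER_NVL72 = 72
PETAFLOPS_PER_EXAFLOP = 1000


def implied_per_gpu_petaflops(total_exaflops: float, gpu_count: int) -> float:
    """Average FP4 petaflops per GPU implied by a cluster-level total."""
    return total_exaflops * PETAFLOPS_PER_EXAFLOP / gpu_count


# Number of NVL72 systems needed to reach 576 GPUs.
nvl72_systems = SUPERPOD_GPUS // GPUS_PER_NVL72

# Implied FP4 throughput per GPU and per NVL72 system.
per_gpu_pf = implied_per_gpu_petaflops(SUPERPOD_FP4_EXAFLOPS, SUPERPOD_GPUS)
per_system_ef = per_gpu_pf * GPUS_PER_NVL72 / PETAFLOPS_PER_EXAFLOP

print(f"NVL72 systems in the SuperPOD: {nvl72_systems}")
print(f"Implied FP4 per GPU: {per_gpu_pf:.1f} petaflops")
print(f"Implied FP4 per NVL72 system: {per_system_ef:.1f} exaflops")
```

On these numbers, a 576-GPU SuperPOD corresponds to eight NVL72 systems, with an implied 50 petaflops per GPU and 3.6 exaflops per system.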

By comparison, Huawei’s Atlas 950 SuperPod claims 16 Exaflops FP4 per SuperPod, meaning Nvidia reaches higher efficiency per GPU and requires fewer units to achieve extreme compute levels.
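The per-accelerator comparison can be made concrete without knowing Huawei's exact device count. The sketch below, which uses only the two FP4 totals and the Nvidia GPU count quoted in this article, computes the ratio of the totals and the break-even accelerator count: the number of devices at which a 16-exaflop system would match Nvidia's implied per-GPU throughput. All names are hypothetical, and both vendors' figures are claimed peaks, not measured results.

```python
# Claimed FP4 totals from the article.
NVIDIA_FP4_EXAFLOPS = 28.8
NVIDIA_GPUS = 576
HUAWEI_FP4_EXAFLOPS = 16.0


def per_device_petaflops(total_exaflops: float, devices: int) -> float:
    """Average FP4 petaflops per accelerator for a given cluster total."""
    return total_exaflops * 1000 / devices


# How much larger Nvidia's claimed SuperPOD total is.
total_ratio = NVIDIA_FP4_EXAFLOPS / HUAWEI_FP4_EXAFLOPS

# Nvidia's implied per-GPU throughput.
nvidia_per_gpu_pf = per_device_petaflops(NVIDIA_FP4_EXAFLOPS, NVIDIA_GPUS)

# Device count at which a 16-exaflop system would equal that per-device figure.
# A system using more accelerators than this delivers less FP4 per device.
break_even_devices = HUAWEI_FP4_EXAFLOPS * 1000 / nvidia_per_gpu_pf

print(f"Ratio of claimed totals: {total_ratio:.2f}x")
print(f"Nvidia implied per-GPU FP4: {nvidia_per_gpu_pf:.1f} petaflops")
print(f"Break-even device count at 16 exaflops: {break_even_devices:.0f}")
```

To compare actual per-device figures, pass Huawei's published NPU count for the Atlas 950 SuperPod to `per_device_petaflops`.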

Rubin-based DGX clusters also use fewer nodes and cabinets than Huawei’s SuperCluster, which scales into thousands of NPUs and multiple petabytes of memory.

This performance density allows Nvidia to compete directly with Huawei’s projected compute output while limiting space, power, and interconnect overhead.

The Rubin platform unifies AI compute, networking, and software into a single stack.

Nvidia AI Enterprise software, NIM microservices, and mission-critical orchestration create a cohesive environment for long-context reasoning, agentic AI, and multimodal model deployment.

While Huawei scales primarily through hardware count, Nvidia emphasizes rack-level efficiency and tightly integrated software controls, which may reduce operational costs for industrial-scale AI workloads.

Efosa Udinmwen, Freelance Journalist

Efosa has been writing about technology for over 7 years, initially driven by curiosity but now fueled by a strong passion for the field. He holds both a Master's and a PhD in sciences, which provided him with a solid foundation in analytical thinking.
